Below you can find information regarding data sets that our research group has released or can provide upon request:

YCBInEOAT Dataset

Because no suitable dataset existed for RGBD-based 6D pose tracking in robotic manipulation, we developed a new one. It has the following key attributes:

- Real manipulation tasks

- 3 kinds of end-effectors

- 5 YCB objects

- 9 videos for evaluation, 7,449 RGBD frames in total

- Ground-truth poses annotated for each frame

- Forward-kinematics recorded

- Camera extrinsic parameters calibrated
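Because every frame carries a ground-truth pose, a tracker's output can be compared against it frame by frame. The following is a minimal sketch of one common comparison (translation distance and rotation angle), not the dataset's official evaluation protocol. It assumes that both poses are available as 4x4 homogeneous matrices in the same frame and the same length unit; the function name `pose_errors` and the example values are hypothetical.

```python
import math


def pose_errors(T_est, T_gt):
    """Return (translation error, rotation error in degrees) between two 4x4 poses.

    Assumption: both poses are rigid transforms stored as nested lists and
    expressed in the same reference frame and unit.
    """
    # Euclidean distance between the two translation vectors.
    t_err = math.sqrt(sum((T_est[i][3] - T_gt[i][3]) ** 2 for i in range(3)))
    # trace(R_gt^T R_est) equals the sum of element-wise products of the rotation blocks.
    trace = sum(T_gt[i][j] * T_est[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against values slightly outside [-1, 1] caused by rounding.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return t_err, math.degrees(math.acos(cos_angle))


# Made-up example: the estimate is rotated 90 degrees about z and shifted by 5 cm.
T_gt = [[1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0]]
T_est = [[0.0, -1.0, 0.0, 0.03],
         [1.0, 0.0, 0.0, 0.04],
         [0.0, 0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0, 1.0]]
t_err, r_err = pose_errors(T_est, T_gt)
```

Averaging these two errors over all frames of a video gives a simple per-video summary; metrics that account for object geometry or symmetry would need the object models as well.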


Object Pose Estimation In Adaptive Hands Dataset

Many manipulation tasks, such as placement or within-hand manipulation, require the object’s pose relative to a robot hand. The task is difficult when the hand significantly occludes the object. It is especially hard for adaptive hands, whose finger configuration is not easy to detect.

- Real-world data (986 samples): ground-truth 6D pose.

- Synthetic data (12,000 samples):

  - Ground-truth 6D pose of everything, including every finger.

  - Ground-truth semantic segmentation of every link of the hand and of the object.

  - Additional rendered images of the objects in the same pose but not occluded by the hand.

- Object and hand CAD models, and computed PPF features.

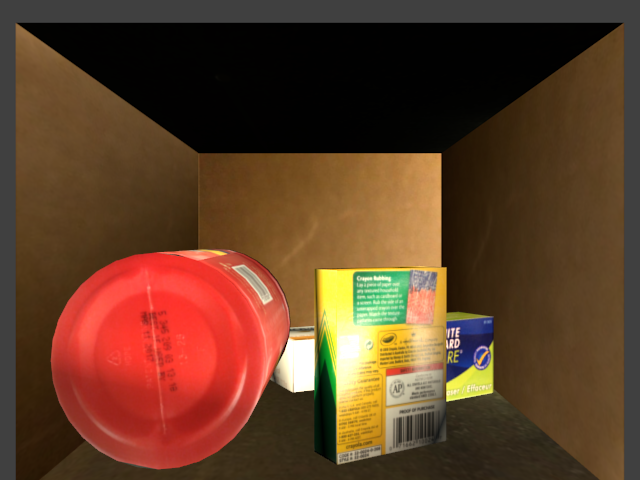

Rutgers APC RGB-D Dataset

To better equip the research community in evaluating and improving robotic perception solutions for warehouse picking challenges, the PRACSYS lab at Rutgers University provides a rich new RGB-D dataset for warehouse picking, along with software for using it. The dataset contains 10,368 registered depth and RGB images, complete with hand-annotated 6DOF poses for 24 of the Amazon Picking Challenge (APC) objects (mead_index_cards excluded). Also provided are 3D mesh models of the 25 APC objects, which may be used for training recognition algorithms.

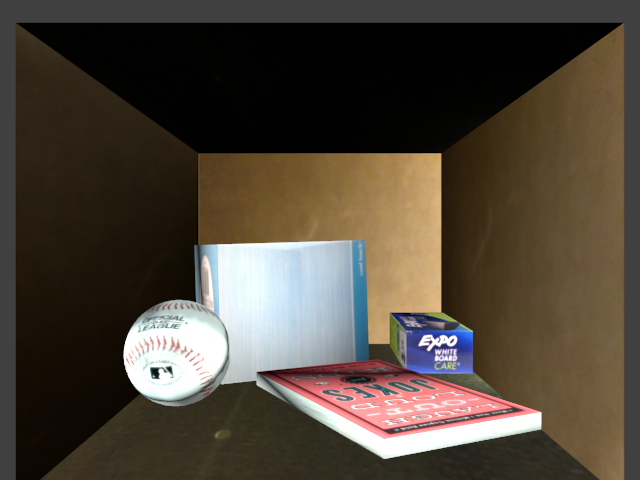

Rutgers Extended RGBD dataset

Dataset download link: download

For each scene in the dataset, we share:

- RGB Image

- Depth Image

- Segmentation mask

- Parameters

  - camera_pose: pose of the camera in a global frame.

  - camera_intrinsics: intrinsic parameters of the camera.

  - rest_surface: pose of the resting surface, such as a table or shelf bin.

  - dependency_order: physical and visual dependency of objects upon each other.

  - pose: ground-truth object pose in a global frame.
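The parameters listed above can be combined to express an object pose in the camera frame and to project the object origin into the image. The sketch below assumes that `camera_pose` and `pose` are 4x4 homogeneous matrices mapping camera and object coordinates into the global frame, and that the intrinsics reduce to a distortion-free pinhole model with `fx`, `fy`, `cx`, `cy`. The dataset's actual file layout and conventions may differ, and all function names and values here are hypothetical.

```python
# Hypothetical sketch: the pose and intrinsics layout is assumed, not read from the dataset files.

def invert_rigid(T):
    """Invert a 4x4 rigid transform stored as nested lists."""
    # Transpose of the 3x3 rotation block.
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    # The inverse translation is -R^T t.
    t_inv = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def matmul4(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def object_in_camera(camera_pose, object_pose):
    """Express a global-frame object pose in the camera frame."""
    return matmul4(invert_rigid(camera_pose), object_pose)


def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Project a camera-frame 3D point with a distortion-free pinhole model."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is not in front of the camera")
    return (fx * x / z + cx, fy * y / z + cy)


# Made-up example: camera 1 m along the global z axis, object 2 m along it.
camera_pose = [[1.0, 0.0, 0.0, 0.0],
               [0.0, 1.0, 0.0, 0.0],
               [0.0, 0.0, 1.0, 1.0],
               [0.0, 0.0, 0.0, 1.0]]
object_pose = [[1.0, 0.0, 0.0, 0.1],
               [0.0, 1.0, 0.0, 0.0],
               [0.0, 0.0, 1.0, 2.0],
               [0.0, 0.0, 0.0, 1.0]]

T_cam_obj = object_in_camera(camera_pose, object_pose)
origin_cam = [T_cam_obj[i][3] for i in range(3)]
pixel = project_to_pixel(origin_cam, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
```

The same composition applies to `rest_surface` if it is stored in the same way, which makes it possible to reason about objects relative to the table or shelf bin rather than the global frame.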

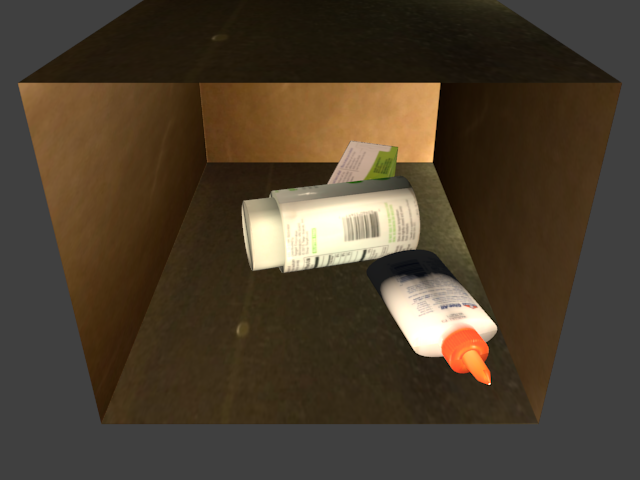

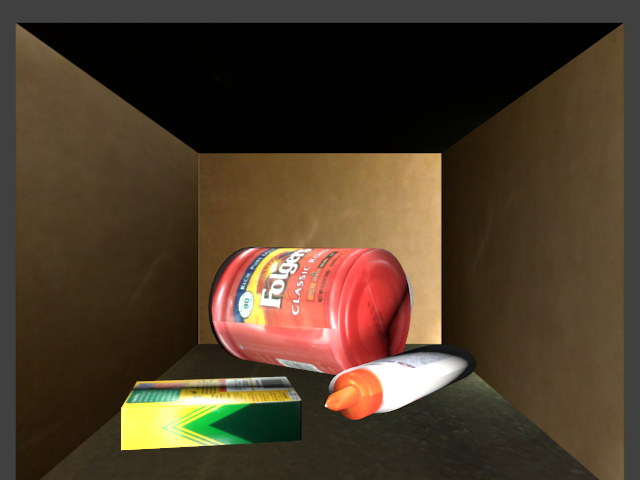

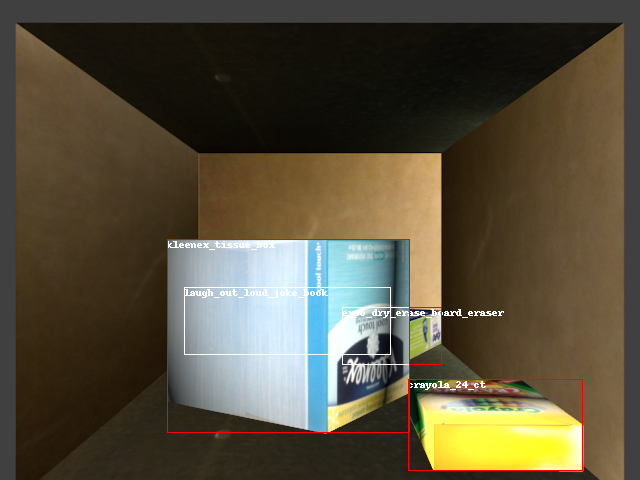

Examples of scenes in the dataset and results of pose estimation with physics-based reasoning.

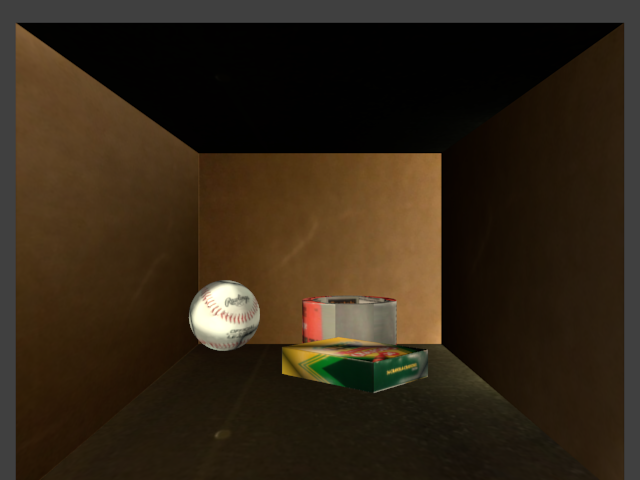

Autonomous data generation to train CNNs for object segmentation

The training dataset is generated by physical simulation of the setup in which the robot operates. The tool we developed for autonomous data generation, labeling and training is shared below.

Dataset Generation toolbox: https://github.com/cmitash/physim-dataset-generator

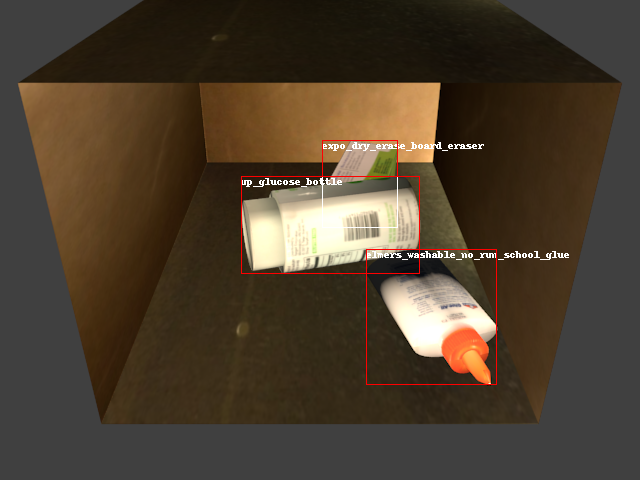

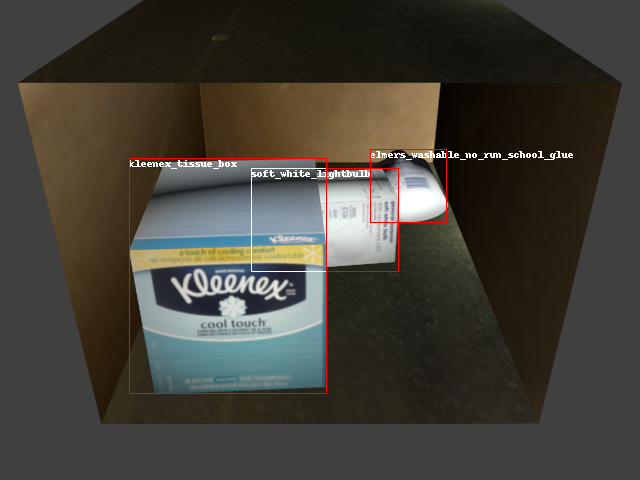

Examples of scenes generated by the toolbox

Learnt models for object segmentation

Faster-RCNN (VGG16) Physics Simulation + Self Learning (Shelf): download

Faster-RCNN (VGG16) Physics Simulation + Self Learning (Table-top): download

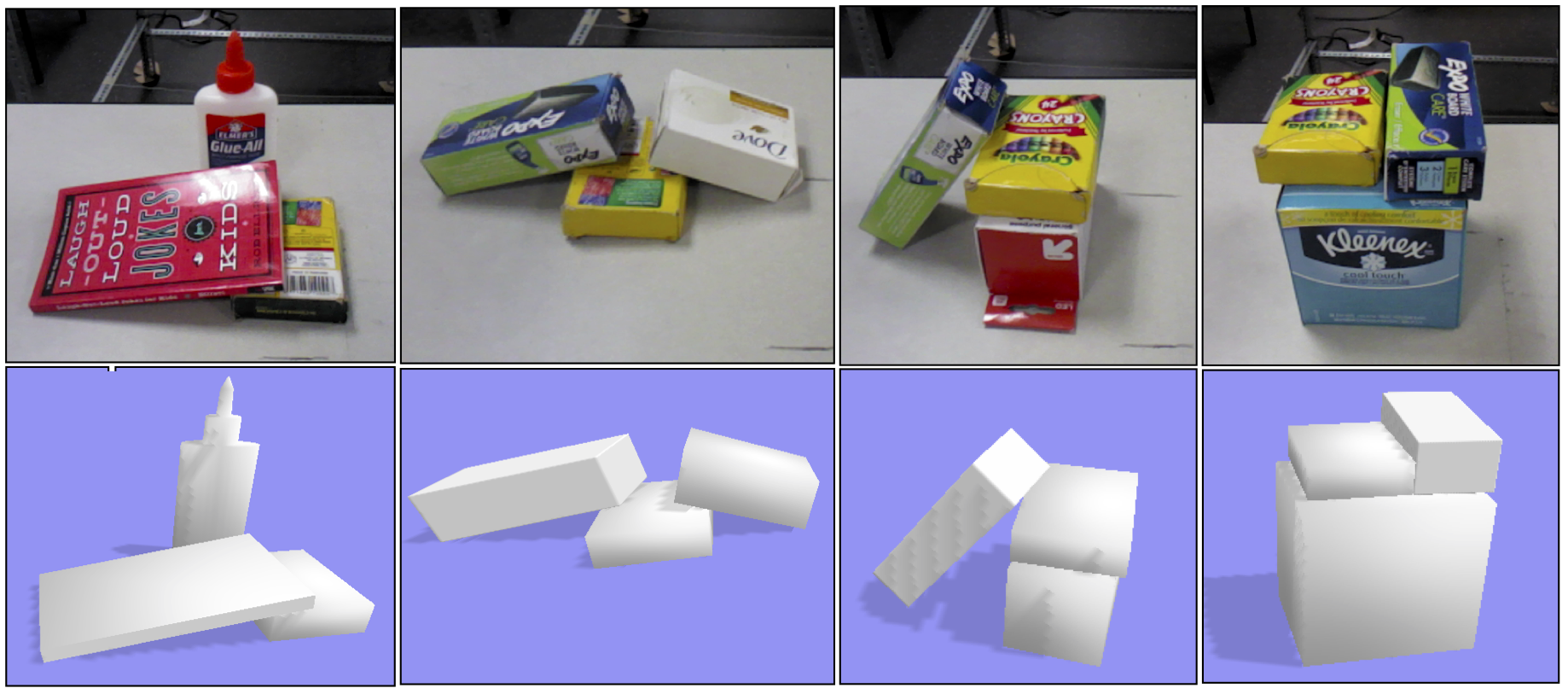

6D pose hypotheses dataset for manipulation planning in table setup

The 6D pose hypotheses dataset for manipulation planning is generated in three steps:

(1) Image capture with an Azure Kinect camera

(2) A fully convolutional neural network that returns object classification and segmentation probability maps

(3) A geometric model matching process that returns pose hypotheses for each detected object
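As an illustration of how segmentation probability maps like those mentioned above are commonly turned into a per-pixel label image, the sketch below takes the most probable class at each pixel and marks low-confidence pixels as background. This is a generic post-processing step, not necessarily the one used to build this dataset. The input layout (one height-by-width grid per class), the `min_prob` threshold, and the `background` label are all assumptions.

```python
def label_map(prob_maps, min_prob=0.5, background=-1):
    """Convert per-class probability maps into a per-pixel label grid.

    Assumption: prob_maps[k][r][c] is the probability that pixel (r, c)
    belongs to class k, and all maps share the same height and width.
    """
    height = len(prob_maps[0])
    width = len(prob_maps[0][0])
    labels = []
    for r in range(height):
        row = []
        for c in range(width):
            # Index of the class with the highest probability at this pixel.
            best = max(range(len(prob_maps)), key=lambda k: prob_maps[k][r][c])
            # Pixels whose best probability is below the threshold become background.
            row.append(best if prob_maps[best][r][c] >= min_prob else background)
        labels.append(row)
    return labels


# Made-up 1x3 example with two object classes.
probs = [
    [[0.9, 0.2, 0.3]],   # class 0
    [[0.05, 0.7, 0.4]],  # class 1
]
labels = label_map(probs)
```

The pixels assigned to each class could then be used to select the depth points passed to a model matching stage.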

Examples of scenes (the first row shows the RGB images; the second row shows the pose hypotheses visualized in the PyBullet physics simulator)

The dataset can be referenced here.